About

Hands‑on HPC/AI, storage and infrastructure leader who writes full‑stack applications in Go and Python and automates with Ansible, Terraform and GitLab CI. I design software‑defined clouds, SDN and SDS (Ceph, GPFS, VAST and WEKA) for on‑prem and public clouds. I have built exabyte‑scale estates, low‑latency GPU clusters and turnkey Kubernetes + Slurm stacks that sustain 99.99% uptime while cutting TCO by up to 50%.

I currently lead engineering at White Fiber HPC, a pre‑IPO multi‑region GPU cloud, after founding the decentralized compute marketplace Tunninet. My career spans Bloomberg, Millennium / WorldQuant, NetApp PS and more, consistently bridging business goals with deep technical execution.

Beyond work I explore new tech, travel, and build open‑source projects that push the boundaries of distributed systems and cybersecurity, all running on my private OpenStack + Kubernetes lab.

This very site is served from my own multi‑region OpenStack and Kubernetes cloud, showcasing my commitment to hands‑on engineering and innovation. I believe in results over words, and this site is a testament to my capabilities and achievements in the tech industry.

Work Experience


Education

Check out my latest work

Throughout my career, I have architected and delivered a diverse range of solutions, encompassing web and mobile applications, sophisticated automation frameworks, global networking infrastructures, and cutting-edge cloud services. Below are some of the projects I am particularly proud of.

Note: Some services are secured, and some are not optimized for mobile devices.

Tunninet Cloud

I built a complex network mesh spanning 10 geographical regions to share network and compute resources. This mesh forms the cloud that supports my applications via Kubernetes. Not all regions have substantial servers; some are edge locations that use policy-based routing to reach other regions. Four cloud servers provide redundancy: two in NJ and two in NYC, connected via the mesh and load balanced.

Kubernetes Bootstrap

Due to the current limitations of OpenStack's Magnum project, particularly its lack of support for dual-stack networking, I developed my own solution to replace Magnum. Using a combination of Terraform, OpenStack, Ansible, and Cloud-Init, I built a custom bootstrap process to configure and deploy my environment and software stack independently. This cluster now runs every app shown in this portfolio, as well as this site.

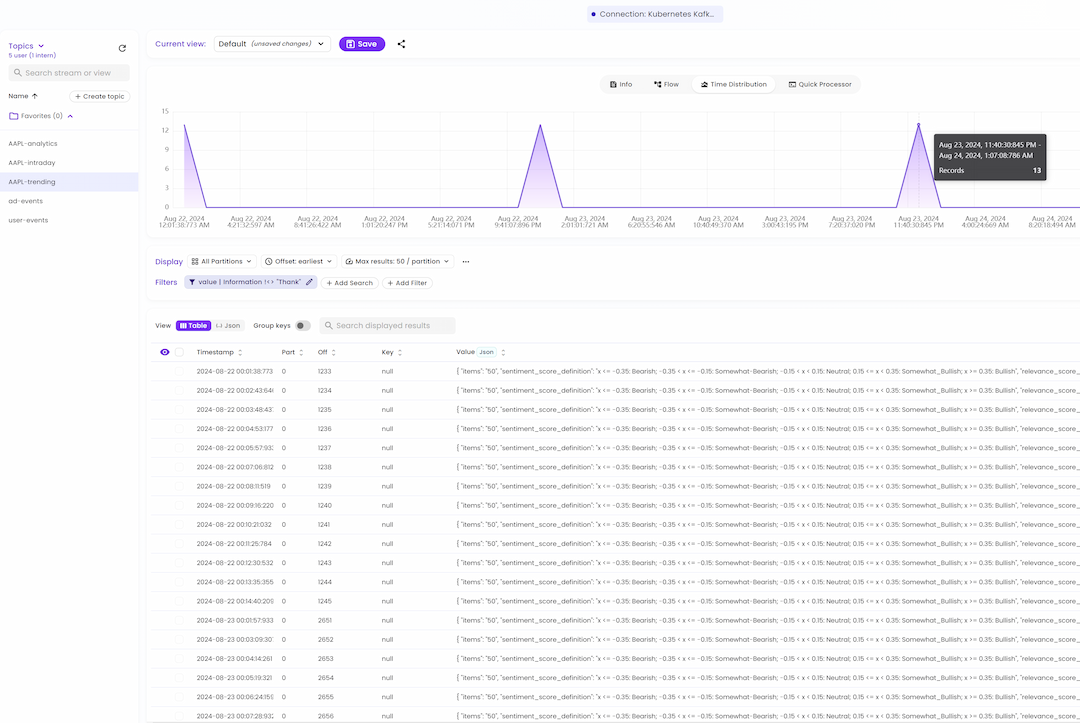

Kafka

I configured Kafka and a dashboard in my Kubernetes environment using CI/CD automation with Ansible and the Kubernetes API. This setup tests applications requiring streaming data, like advertising tech and real-time stock feeds. Below, you can see raw data showing AAPL stock trends, sourced from a producer API and processed via a backend WebSocket for real-time updates.

Grafana Dashboards

Due to the complexity of Kubernetes subsystems, ingresses, and applications, developers and DevOps professionals need to monitor their environments by scraping endpoints into Prometheus time-series databases and visualizing them in dashboards. I deployed a Kubernetes-based Prometheus setup that uses custom tags to select which applications to scrape.
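
Tag-based scraping can be pictured as a filter over pod metadata. The sketch below assumes the widely used `prometheus.io/scrape`, `prometheus.io/port` and `prometheus.io/path` annotation convention; this page does not name the actual tags, and the pod dictionaries, the `scrape_targets` helper and the default port are hypothetical stand-ins for what Prometheus service discovery and relabeling would do.

```python
# Hypothetical default used only when a pod does not declare a port.
DEFAULT_PORT = "8080"
DEFAULT_PATH = "/metrics"


def scrape_targets(pods):
    """Return scrape URLs for pods that opt in through annotations.

    `pods` is a list of dicts with an "ip" key and an optional
    "annotations" dict, standing in for Kubernetes pod metadata.
    """
    targets = []
    for pod in pods:
        annotations = pod.get("annotations", {})
        # Only pods explicitly tagged for scraping are included.
        if annotations.get("prometheus.io/scrape") != "true":
            continue
        port = annotations.get("prometheus.io/port", DEFAULT_PORT)
        path = annotations.get("prometheus.io/path", DEFAULT_PATH)
        targets.append(f"http://{pod['ip']}:{port}{path}")
    return targets


if __name__ == "__main__":
    pods = [
        {"ip": "10.0.0.5", "annotations": {"prometheus.io/scrape": "true",
                                           "prometheus.io/port": "9100"}},
        {"ip": "10.0.0.6", "annotations": {}},  # untagged, so skipped
    ]
    print(scrape_targets(pods))
```

Keeping the opt-in on the workload itself means a new application becomes visible to monitoring by adding a tag, without editing the Prometheus configuration.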

Streaming Advertisement API

I developed an advert simulator using Python, Kafka, MongoDB and other tools to showcase SSP and DSP marketplace ads. It streams relevant ads in response to events such as page views, clicks, and purchases. Future enhancements include integrating AI (a recommendation engine) and completing the ETL chain beyond random ad sequences.
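
As a rough illustration of event-driven ad selection, the sketch below weights each event type and picks the ad whose category scores highest for a user's recent events. The event weights, the `Ad` fields and the `pick_ad` helper are hypothetical names chosen for illustration, not the simulator's actual code; Kafka and MongoDB are left out so the example runs standalone.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical weights: stronger purchase intent counts for more.
EVENT_WEIGHTS = {"page_view": 1, "click": 3, "purchase": 5}


@dataclass(frozen=True)
class Ad:
    ad_id: str
    category: str


def score_categories(events):
    """Sum event weights per product category.

    `events` is an iterable of (event_type, category) tuples, standing in
    for messages that would arrive on a Kafka topic. Unknown event types
    contribute nothing.
    """
    scores = Counter()
    for event_type, category in events:
        scores[category] += EVENT_WEIGHTS.get(event_type, 0)
    return scores


def pick_ad(events, inventory):
    """Return the ad whose category scored highest, or None if nothing matches."""
    scores = score_categories(events)
    candidates = [ad for ad in inventory if scores.get(ad.category, 0) > 0]
    if not candidates:
        return None
    # max() keeps the first ad on ties, so inventory order is the tie-breaker.
    return max(candidates, key=lambda ad: scores[ad.category])


if __name__ == "__main__":
    inventory = [Ad("ad-1", "shoes"), Ad("ad-2", "laptops")]
    events = [("page_view", "shoes"), ("click", "laptops"), ("page_view", "shoes")]
    print(pick_ad(events, inventory))
```

A recommendation engine, as mentioned under future enhancements, would replace the fixed weights with learned scores while keeping the same event-in, ad-out shape.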

Kafka-Driven AI/Quant Equities API

The API handles equities analysis, including volume and intraday data delivered over a real-time WebSocket. It evaluates trends and makes buy/sell calls based on stable and predicted growth. A React front end and admin portal manage the equities, and predictions are visualized in Grafana, offering a turnkey quant tool.
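
One simple way to turn a price series into buy/sell calls is to compare a short moving average against a long one; the sketch below is a minimal version under that assumption. This page does not say which model the API uses, so `moving_average`, `signal` and the window sizes are illustrative placeholders, and the prices are made-up sample values rather than real market data.

```python
def moving_average(prices, window):
    """Mean of the last `window` prices."""
    if window <= 0 or len(prices) < window:
        raise ValueError("window must be positive and no longer than the series")
    return sum(prices[-window:]) / window


def signal(prices, short_window=3, long_window=5):
    """Return 'buy', 'sell', or 'hold' from a short vs. long moving-average comparison."""
    if len(prices) < long_window:
        return "hold"  # not enough history to judge a trend
    short_ma = moving_average(prices, short_window)
    long_ma = moving_average(prices, long_window)
    if short_ma > long_ma:
        return "buy"   # recent prices are above the longer-term average
    if short_ma < long_ma:
        return "sell"  # recent prices are below the longer-term average
    return "hold"


if __name__ == "__main__":
    sample_prices = [100.0, 101.0, 102.0, 103.0, 104.0]  # made-up values
    print(signal(sample_prices))
```

In a streaming setup, each new tick from the WebSocket would be appended to the series and `signal` re-evaluated on the updated window.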

Tunninet Custom Router & APIs

Tunninet's core product securely connects end-users to the DMZ, allowing only authenticated access while shielding the API. It also creates a decentralized marketplace for compute and AI workloads, matching microservices demand with resources, aiming to become the 'Uber of compute'.

This Biography Website

I built my biography website because results speak louder than words. It is a dynamic, engaging resume that gives recruiters and colleagues a comprehensive view of my achievements and insights beyond a traditional resume, fostering a more meaningful and lasting connection.

Get in Touch

Want to chat? Just send me a DM with a direct question on X (Twitter) or LinkedIn and I'll respond whenever I can.